AI traffic moves faster when it's local

AI traffic does not behave like normal internet traffic. Traditional transit models were built for north–south traffic. That means users pulling content from somewhere else.

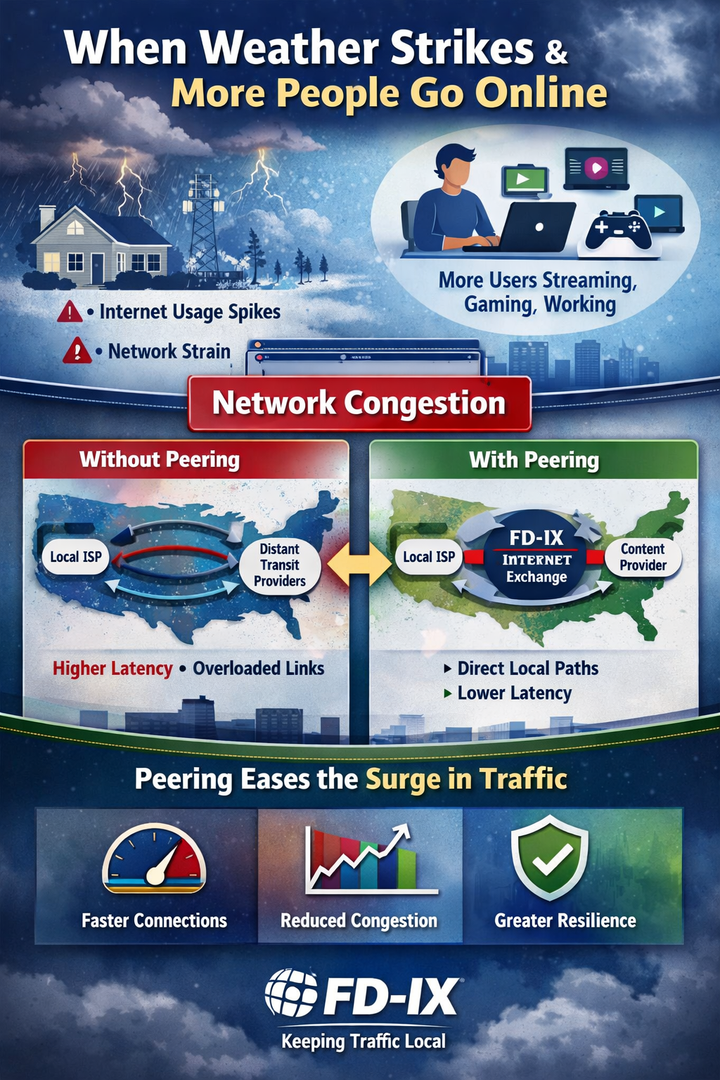

Traffic from AI workloads differs significantly from conventional internet traffic. Traditional transit models are designed for north–south flows, where users retrieve content from external sources. For example, residential users stream Netflix and businesses access Microsoft 365. In these cases, traffic exits the local network, traverses a transit provider, and reaches a remote destination. This model works because most flows are bursty and asymmetric: heavy downloads, light uploads, and predictable patterns.

AI workloads disrupt this established pattern.

AI training clusters generate considerable east–west traffic, which refers to server-to-server communication within or between data centers. In these environments, GPUs exchange gradients, storage nodes supply training data, and parameter servers synchronize models. This traffic prioritizes proximity and bandwidth rather than reach across the public Internet.

Transit providers design and price their services for aggregate internet access, assuming oversubscription and that traffic peaks will average out across many users. In contrast, AI workloads generate sustained and synchronized loads. For instance, when 200 GPUs simultaneously start a training epoch, the resulting traffic is steady and high. In this context, oversubscription no longer provides efficiency; instead, it becomes a major bottleneck.
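To make the oversubscription point concrete, here is a minimal sketch in Python. Every number in it is a hypothetical chosen for illustration: 200 sources at 1 Gbps each, ten time slots, and an uplink sized at 4:1 oversubscription. It compares sources whose bursts are spread across the slots with sources that all transmit at once.

```python
def peak_aggregate_gbps(active_slots_per_source, slots, rate_gbps):
    """Sum per-slot load across all sources and return the busiest slot."""
    load = [0.0] * slots
    for active in active_slots_per_source:
        for slot in active:
            load[slot] += rate_gbps
    return max(load)

# Hypothetical figures, not measurements.
SOURCES = 200
SLOTS = 10
RATE_GBPS = 1.0
UPLINK_GBPS = SOURCES * RATE_GBPS / 4  # 4:1 oversubscription -> 50 Gbps

# Bursty users: each source is active in one slot, spread evenly.
staggered = [{i % SLOTS} for i in range(SOURCES)]

# Synchronized training: every source is active in every slot.
synchronized = [set(range(SLOTS)) for _ in range(SOURCES)]

staggered_peak = peak_aggregate_gbps(staggered, SLOTS, RATE_GBPS)
synchronized_peak = peak_aggregate_gbps(synchronized, SLOTS, RATE_GBPS)

print(f"uplink capacity:   {UPLINK_GBPS:.0f} Gbps")
print(f"staggered peak:    {staggered_peak:.0f} Gbps")
print(f"synchronized peak: {synchronized_peak:.0f} Gbps")
```

Under these assumptions the staggered load peaks at 20 Gbps and fits comfortably in the 50 Gbps uplink, while the synchronized load asks for 200 Gbps. The link is the same in both cases. Only the timing of the demand changed.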

Latency presents an additional challenge.

Traditional transit adds hops. Each hop adds delay and jitter. For web browsing, a few extra milliseconds rarely matter. For distributed AI training, small delays compound. Gradient updates wait. Synchronization slows. Training time stretches. When time equals money, milliseconds equal dollars.
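A rough back-of-the-envelope sketch shows how small delays add up. It assumes a ring all-reduce, which takes 2 × (N − 1) sequential communication steps for N workers, one all-reduce per training iteration, and no overlap between communication and compute. The iteration count, worker count, and added latency are all hypothetical, and real frameworks hide part of this cost, so treat the result as an upper-bound illustration rather than a prediction.

```python
def added_sync_seconds(iterations, workers, extra_latency_us):
    """Estimate extra wall-clock time from added per-step latency.

    Assumes one ring all-reduce per iteration with no compute overlap.
    """
    # Ring all-reduce: (workers - 1) reduce-scatter steps
    # plus (workers - 1) all-gather steps, executed in sequence.
    sequential_steps = 2 * (workers - 1)
    total_us = iterations * sequential_steps * extra_latency_us
    return total_us / 1_000_000

# Hypothetical job: 100,000 iterations, 8 workers, 1 ms of extra latency per step.
extra = added_sync_seconds(iterations=100_000, workers=8, extra_latency_us=1_000)
print(f"added training time: {extra:.0f} s ({extra / 60:.1f} min)")
```

With these inputs, one extra millisecond per step turns into roughly 23 minutes of additional wall-clock time on a cluster that is billed by the hour.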

Cost considerations also arise.

Transit charges are often based on 95th-percentile usage. AI workloads pin links near capacity for long periods, so your 95th percentile is basically your peak. The billing model assumes bursts. AI gives it a plateau. The invoice shows it.

AI workloads are also sensitive to network asymmetry. Traditional internet traffic models assume predominantly downstream demand, whereas AI clusters generate balanced upstream and downstream traffic. High upstream utilization can cause congestion in network segments engineered primarily for downloads, so a link that is symmetric on paper can still behave asymmetrically in practice.
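The 95th-percentile billing effect described above is easy to see with numbers. The sketch below assumes the common convention of 5-minute samples over a 30-day month and a nearest-rank percentile; providers differ on the details, and the traffic profiles are invented for illustration.

```python
def percentile_95(samples_mbps):
    """Billable rate: sort the samples and take the nearest-rank 95th percentile."""
    ordered = sorted(samples_mbps)
    # Integer ceiling of 0.95 * n, which avoids floating-point rounding.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]

# Hypothetical month: 30 days * 288 five-minute samples per day.
SAMPLES = 30 * 288

# Bursty profile: about 4% of samples at 9000 Mbps, the rest at 1000 Mbps.
burst_count = SAMPLES * 4 // 100
bursty = [9000] * burst_count + [1000] * (SAMPLES - burst_count)

# Plateau profile: 60% of samples at 9000 Mbps, the rest at 2000 Mbps.
busy_count = SAMPLES * 60 // 100
plateau = [9000] * busy_count + [2000] * (SAMPLES - busy_count)

bursty_bill = percentile_95(bursty)
plateau_bill = percentile_95(plateau)

print(f"bursty billable rate:  {bursty_bill} Mbps")
print(f"plateau billable rate: {plateau_bill} Mbps")
```

Both profiles reach the same 9000 Mbps peak. The bursty one stays under the 5% that gets discarded and is billed at 1000 Mbps, while the plateau is billed at the full 9000 Mbps.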

Routing policy adds further complexity.

Transit providers optimize for reachability and scale. AI operators optimize for deterministic performance. They want short, fixed paths between known endpoints. Transit routing may shift based on cost or policy. AI engineers prefer stable paths with minimal variance. Consistency matters more than global reach.

A local interconnection fabric such as FD-IX radically changes these conditions.

Instead of hairpinning through upstream transit, AI operators can peer directly with storage providers, research networks, cloud on-ramps, and data partners. Traffic stays local. Fewer hops. Lower latency. Predictable performance. Costs align with actual port capacity rather than burst-based billing tricks.

AI traffic is defined by high volume, lateral movement, and sustained duration, whereas transit networks are optimized for distributed, bursty, north–south flows. This mismatch leads to congestion, increased costs, and performance variability.

The network architecture suitable for streaming video differs fundamentally from that required for distributed AI training clusters. AI workloads do not simply require more bandwidth; they represent an entirely distinct traffic pattern. When infrastructure is adapted to these patterns, operational efficiency improves significantly.

The lesson is simple. When machines talk to machines at scale, distance matters. Architecture matters. And the old assumptions about internet traffic start to look like they belong to a different era. FD-IX is at the leading edge of integrating AI workloads into your traffic flows.